Israel’s Sick Sense of Humour

With all the talk about a ceasefire in Lebanon, the following news about AI (artificial intelligence) may have got lost. The Israelis and their supporters like to laugh about the thousands left severely injured or blinded and the dozens killed in Lebanon in late September when Israel detonated tens of thousands of pagers at one time. Quite a joke! Or when Israel programmed explosions of thousands of walkie-talkies which killed 20 and seriously injured another 450 in Lebanon. Israel suspected the pagers and walkie-talkies belonged to Hezbollah ‘militants’. So were the killings and maimings justified? Really?

And who are the ‘militants’? For example, 7% of Israelis are in the reserves (compared to only 0.5% of US citizens in their own country) and are required to do military service every year. Are the reservists ‘militants’? No one calls Israeli fighters that – but they are. Who says who is a ‘militant’? Obviously the victors in any struggle get to call whomever they like ‘militants’.

But were children with pagers in their backpacks, hospital doctors with pagers in their pockets, politicians who had pagers, men on construction sites with walkie-talkies – were they all ‘militants’? Israel would have us believe they were. But there were thousands of civilian victims of the pager and the walkie-talkie explosions. The Israelis wanted to show us all how clever they were – they can create war and death. Yet they use gallows humour to justify their reliance on AI to blind children and adults and cause their fingers to be amputated, all at the same moment.

Who is a ‘Militant’ in Gaza?

Is everyone that Israel targets a ‘militant’? Clearly not. We see that from what happened with the utter destruction of Gaza. Yet Israel insists that every male in Gaza is a ‘militant’ and a target for death. And what about his family? Are they ‘militants’ too, from babies to school children to the elderly, all targets for death? Killing them amounts to war crimes. Killing 100 civilians in an effort to kill one man does not mean the man is a ‘militant’. Maybe he is just a father or grandfather – killing him is a war crime. If Israel targets any and all men for death, that too is a war crime.

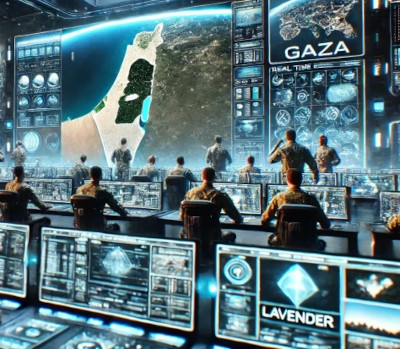

Israel’s war on Gaza is aided and abetted by AI (artificial intelligence). AI powers three different programs (Lavender, The Gospel, Where’s Daddy) that generate and pinpoint targets for assassination in Gaza.

Right after 7 Oct 2023, Lavender marked 37,000 Palestinians as suspected ‘militants’ and targeted them and their homes and families for potential air strikes.

But as far as Lavender goes, a member of the Israeli military noted, there is a major problem, “if the [Hamas] target gave [his phone] to his son, his older brother, or just a random man. That person will be bombed in his house with his family. This happened often…” These are called ‘errors’. At least 10% of Palestinians killed in air strikes are ‘errors’ – because Lavender is only supposed to target men (as they are assumed to be the ‘militants’), and sometimes Lavender marks people as targets who have no connection or very limited connection with ‘militants’.

In the early weeks of Israel’s war on Gaza, the higher-ups in the IDF (Israel Defense Forces) said that, to kill any junior [Hamas] ‘militant’, it was acceptable to also kill 15-20 civilians. If the target was believed to be a Palestinian ‘leader’, Israel saw nothing wrong with killing 100 people to murder one ‘leader’.

While Lavender distinguishes individual people, another AI tool, The Gospel, marks buildings, hospitals and shelters for annihilation.

Dumb Bombs vs Smart Bombs

The Israeli army used “dumb bombs” (as opposed to “smart” precision bombs) to destroy entire buildings that collapsed on top of residents and caused terrible injuries and death. As one Israeli officer commented about the use of “dumb bombs”, “You don’t want to waste expensive bombs on unimportant people – it’s very expensive.” According to +972,

“the implication, one source explained, was that the army would not strike a junior target if they lived in a high-rise building, because the army did not want to spend a more precise and expensive ‘floor bomb’ (with more limited collateral effect) to kill him. But if a junior target lived in a building with only a few floors, the army was authorized to kill him and everyone in the building with a dumb bomb.”

The Lavender system is so deadly that Israel killed 15,000 people in the first six weeks after 7 Oct. 2023 – that is one third of the total death toll up to Oct. 2024.

Despite Israel’s devastating attacks, when the number of dead started to wane, one IDF officer said he “added 1200 new targets to the tracking system… and such decisions were not made at high levels.”

The IDF combined Lavender and Where’s Daddy in order to kill entire families, using the tracking system to follow targets to their homes (or now what is left of them). As one officer noted, “Let’s say you calculate that there is one Hamas operative plus 10 civilians in the house, usually, these ten will be women and children. So absurdly, … most of the people you killed were women and children.”

Where’s Daddy?

This is how it happens: Where’s Daddy is alerted when the man, the so-called ‘militant’, has entered his house, but there is often a delay in launching the bomb. So the ‘militant’ may leave the house, even briefly, and during that time the whole family is killed. When he comes back there is nothing and no one left, and the building is destroyed.

Of course the above AI programs worked well for the first months of Israel’s war on Gaza. Both Lavender and Where’s Daddy may still be in use. Using this technology, Israel has already destroyed more than a quarter of a million homes, displacing more than 1.9 million Gazans. Most Palestinians are surviving on the streets or sleeping in the ruins of buildings. More than 45,000 Palestinians are dead, including 17,400 children.

Israel’s targeting of Palestinians continues with new systems and new AI. In the past several months, Israel has deployed unmanned drones called quadcopters that drop explosives and “shoot to kill” even more Palestinians. The Israeli army uses these remotely controlled drones to spy on Gazans, to scare civilians with loud noise and to commit extra-judicial executions. These drones can be used as snipers, and as killing machines. The quadcopters are programmed to enter narrow alleys or cramped ruins where Palestinians now have to live.

Meanwhile, the Israeli army continues to kill Palestinians on an “industrial” scale by targeting residential areas with artillery, aerial strikes and AI missiles.

This article was first published on the Judy Haiven website.